The contents of this post are based on the free Coursera Machine Learning course taught by Andrew Ng.

1. Intuition

Suppose we penalize the parameters θ₃ and θ₄ and make them really small. To do so, we add a new term to the original optimization objective.

e.g.)
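One way to write such a modified objective is sketched below. It assumes the squared-error cost used in the course, with θ₃ and θ₄ as the two penalized parameters; the weight 1000 is only an illustrative choice of "some large number".

$$
\min_{\theta}\ \frac{1}{2m}\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)^2 + 1000\,\theta_3^2 + 1000\,\theta_4^2
$$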

1.1 Make parameters θ₃, θ₄ really small

The only way to make the new cost function small is to make θ₃ and θ₄ small. Therefore, when we minimize the new function, we end up with θ₃ ≈ 0 and θ₄ ≈ 0.

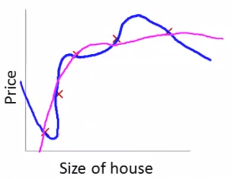

This is similar to removing the two penalized terms, as in the picture above. We end up with what is essentially a quadratic function, which is a reasonable hypothesis for the data.
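The effect can be checked numerically. The following is a minimal sketch, not code from the course: the toy data, the degree-4 polynomial hypothesis, the helper name `fit`, and the penalty weight 1000 are all assumptions made for illustration. It fits the polynomial once without a penalty and once with a heavy penalty on θ₃ and θ₄.

```python
import numpy as np

# Toy data (an assumption for illustration): a quadratic trend plus a small
# deterministic wiggle standing in for noise.
x = np.linspace(0.0, 2.0, 12)
y = 1.0 + 2.0 * x - 0.5 * x**2 + 0.1 * np.sin(7.0 * x)
m = x.size

# Design matrix for h(x) = θ0 + θ1·x + θ2·x² + θ3·x³ + θ4·x⁴.
X = np.vander(x, N=5, increasing=True)


def fit(penalty_weights):
    """Minimize (1/2m)·||Xθ − y||² + Σ_j w_j·θ_j² in closed form."""
    P = np.diag(penalty_weights)
    # Setting the gradient to zero gives (XᵀX/m + 2P) θ = Xᵀy/m.
    return np.linalg.solve(X.T @ X / m + 2.0 * P, X.T @ y / m)


theta_plain = fit(np.zeros(5))                                   # no penalty
theta_penalized = fit(np.array([0.0, 0.0, 0.0, 1000.0, 1000.0])) # penalize θ3, θ4

print("unpenalized:", np.round(theta_plain, 4))
print("penalized:  ", np.round(theta_penalized, 4))
```

In the penalized fit, the last two coefficients are driven close to zero, so the fitted curve is governed almost entirely by the constant, linear, and quadratic terms.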

2. Regularization

Having small values for the parameters θ means that 1) we have a simpler hypothesis and 2) the hypothesis is less prone to overfitting.

We do not know in advance which parameters to shrink. Therefore, we modify the cost function to shrink all of our parameters. Specifically, we add a regularization term, λ Σ θⱼ² (summing over j = 1, …, n).
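Written out in full, the regularized cost function for linear regression takes roughly the following form. This is a sketch assuming the squared-error cost and the notation from the course (m training examples, n features, hypothesis h_θ); note that the second sum starts at j = 1.

$$
J(\theta) = \frac{1}{2m}\left[\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)^2 + \lambda\sum_{j=1}^{n}\theta_j^2\right]
$$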

* By convention, we do not penalize θ₀ for being large. In practice, it makes little difference whether θ₀ is included in the regularization term or left out.
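A minimal sketch of this cost in code, assuming linear regression with a squared-error cost of the form (1/2m)·[Σ(h − y)² + λ·Σ θⱼ²]. The function name `regularized_cost` and the toy data are hypothetical, and `X` is assumed to carry a leading column of ones so that `theta[0]` plays the role of θ₀.

```python
import numpy as np


def regularized_cost(theta, X, y, lam):
    """Regularized squared-error cost for linear regression.

    X is assumed to have a leading column of ones, so theta[0] is θ0.
    """
    m = y.size
    residuals = X @ theta - y
    fit_term = np.sum(residuals**2)
    # Skip theta[0]: by convention θ0 is not penalized.
    reg_term = lam * np.sum(theta[1:] ** 2)
    return (fit_term + reg_term) / (2.0 * m)


# Toy data (an assumption): y = 1 + 2x, which theta below fits exactly.
X = np.array([[1.0, 0.0], [1.0, 1.0], [1.0, 2.0]])
y = np.array([1.0, 3.0, 5.0])
theta = np.array([1.0, 2.0])

print(regularized_cost(theta, X, y, lam=0.0))  # fit term only
print(regularized_cost(theta, X, y, lam=3.0))  # fit term plus penalty on theta[1]
```

Slicing with `theta[1:]` is what keeps θ₀ out of the penalty; using `theta` instead would penalize it as well.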

2.1 λ

λ is a regularization parameter that controls a trade-off between two goals.

- Goal 1) Fit the training data well.

- Goal 2) Keep the parameters small.

∴ We can keep the hypothesis simpler to avoid overfitting.
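The trade-off can be observed by fitting the same model with several values of λ. The sketch below is illustrative only: the toy data, the degree-4 polynomial features, the helper `fit_regularized`, and the particular λ values are all assumptions, and the closed-form solve stands in for whatever optimizer is actually used.

```python
import numpy as np

# Toy data (an assumption for illustration): a quadratic trend plus a small
# deterministic wiggle standing in for noise.
x = np.linspace(0.0, 2.0, 12)
y = 1.0 + 2.0 * x - 0.5 * x**2 + 0.1 * np.sin(7.0 * x)
X = np.vander(x, N=5, increasing=True)   # columns 1, x, x², x³, x⁴


def fit_regularized(lam):
    """Minimize (1/2m)·[||Xθ − y||² + λ·Σ_{j≥1} θ_j²] in closed form."""
    R = np.eye(X.shape[1])
    R[0, 0] = 0.0                        # θ0 is not penalized
    # Setting the gradient to zero gives (XᵀX + λR) θ = Xᵀy.
    return np.linalg.solve(X.T @ X + lam * R, X.T @ y)


for lam in [0.0, 0.1, 10.0, 1000.0]:
    theta = fit_regularized(lam)
    train_mse = np.mean((X @ theta - y) ** 2)   # Goal 1: fit the training data
    size = np.sum(theta[1:] ** 2)               # Goal 2: keep parameters small
    print(f"lambda={lam:>7}: training MSE={train_mse:.4f}, sum of theta_j^2={size:.4f}")
```

As λ grows, the sum of squared parameters does not increase and the training error does not decrease, which is the trade-off between the two goals.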